This is the first race in a series of races as I train and prepare for my Boston qualifying attempt on May 28th, 2017.

This race took place on Saturday, October 22nd, 2016, with a start and finish at Surfer’s Point, in Ventura, CA.

For readers who have not been to Ventura, I highly recommend it as both a city to check out and as a host city for endurance events. I have done several triathlons here previously. Most of the events take place right on the water, and it is usually quite cool, even in the summertime.

I was particularly concerned about this race, as I had come down with a sinus infection the previous weekend. I felt under the weather throughout the week, and had missed three different training runs. I stayed late at work on Friday night and got into Ventura around 9:00 pm. By the time I got some dinner and got settled for the night, it was around 10:30 pm. I stayed in a lovely AirBnB on Friday night that was about a mile away from the starting line. However, I was unable to get to sleep immediately, so when I woke up at 5:30 am, I estimate that I had had a little over six hours of sleep, which was not ideal.

When the alarm rang, I checked the weather on my phone and it said that it was 56° out, and from the sound of the windows rattling around, it was also a little windy. Sadly, I had to leave my phone in my room, as I was by myself and didn’t want to carry it, so I don’t have any pictures to accompany this post.

I ate a Luna bar, drank a bit of water, and jogged the mile to the starting line, having left my AirBnB at around 6:00 am. I signed my waiver and stood in line for my bib number and timing chip. By the time I had all of my paperwork in order, I was actually a little chilly, so I went for another 8-minute jog down the beach.

The race started at 7:00 am, so at around 6:50, I made my way to the starting line. There were no corrals at this event, nor were there elite runners. There were several pacers visible, so I figured I would stick with the 1:40 pacer, as my goal time for this race was 1:38:15.

The organizers were prompt, and the race started at 7:00 on the nose. There was no starting gun or air horn, since they wanted to be quiet for the neighbors. Instead, they had a lady come out and shout “3, 2, 1, GO!!!”. Somehow, it felt a little more personal than an air horn. I was off! My coach had warned me to try to keep the pace between 7:25 and 7:35, and if I was really struggling, to not go slower than 7:45. To heed her advice, I figured I would stick with the 1:40 pace group, but it seems that the pacer was a little overeager, as we completed the first mile in 7:18! It would appear that he realized this and slowed way down, but I kept going, so for the majority of the race I was running by myself.

I neglected to heed my coach’s advice for the next couple of miles. The first part of the race is a loop that goes south for a mile and a half along a road, and then hooks back up with the shoreline bike path and heads north to return to the starting line. I believe that this is the entire 5K course. The sun had not yet risen, and the course was cool, flat, and sheltered from the wind. I felt very strong, and my heart rate stayed in the 160-range, so I figured I was OK.

Turns out, I had made a grievous error in judgement; I had started too hard. Around mile 3.5, the shoreline bike path took a hard right turn, and began to head inland, up the hill towards Ojai. The sun had come up at this point, and I had neglected to wear sunglasses. The worst part, however, was that it was quite windy that day, and as I began to head up the hill, I was faced with what seemed to be a 20-mph headwind. I slowed down considerably, from 7:25 a mile to 7:37 and then 7:43. I remembered that I couldn’t go slower than 7:45, but I was giving it everything I had to make that pace.

The turnaround was at mile 8. After I came through it, what was once an uphill battle facing a headwind was now a gentle downhill with a tailwind. My pace picked back up again, and I was feeling more confident. However, I tried to remind myself that I shouldn’t go faster than 7:20.

The sun had risen at this point and it was warming up considerably. There was not a lot of shade on the bike path, and around mile 10.5, I started to really feel it. It wasn’t that my heart rate was too high; it was hovering around 172. It felt as if I had run out of gas, and that my legs didn’t have anything left. I had been munching on Clif Bloks, one every 10 minutes or so, but this time, I ate two, for a little extra kick. After about a half a mile, I felt better, and continued to press on. I was sticking to around a 7:25 pace.

Just after I passed the marker for mile 12, I felt the same exhaustion again, except this time it was considerably more intense. The downhill of the bike path had transitioned into a slight uphill on city streets, and we were now in full sun. My pace slowed down to 8:00, and my heart rate spiked to 180. I was really struggling at this point. Fortunately for me, a lady I had struck up a conversation with at the starting line passed me. I recognized her, said hello, and she gave me some words of encouragement.

It was just what I needed to hear. I realized that I had less than half a mile to go, so I gritted my teeth and decided to push as hard as I possibly could. I got my pace down to 7:43, and when I passed the marker for mile 13, I transitioned to as much of a sprint as I could muster.

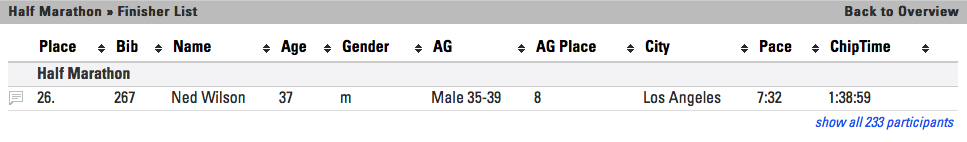

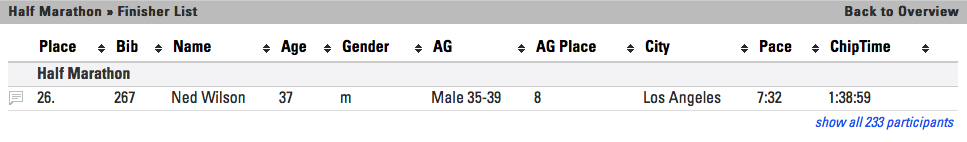

I crossed the finish line and stopped my watch. I had done the race in 1:38:59. This was slightly slower than my goal time of 1:38:15, but my GPS said that I had gone 13.24 miles, which is a little longer than a half marathon, so in reality, I had actually beaten my goal pace by about a second per mile. It’s not official, but I’ll take it!
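For anyone who wants to check that arithmetic, here is a rough sketch in Python. It assumes my watch's 13.24 miles is accurate (GPS tends to read a bit long) and takes a half marathon as 13.1094 miles. The function names are just ones I made up for this post.

```python
# Rough pace check: compare goal pace against the pace implied by the GPS distance.
# Assumption: the watch's 13.24 miles is trustworthy, which GPS often is not.

HALF_MARATHON_MILES = 13.1094  # 21.0975 km expressed in miles


def to_seconds(clock: str) -> int:
    """Convert 'H:MM:SS' or 'M:SS' into whole seconds."""
    total = 0
    for part in clock.split(":"):
        total = total * 60 + int(part)
    return total


def to_clock(seconds: float) -> str:
    """Format seconds as 'H:MM:SS', or 'M:SS' when under an hour."""
    whole = round(seconds)
    hours, rem = divmod(whole, 3600)
    minutes, secs = divmod(rem, 60)
    if hours:
        return f"{hours}:{minutes:02d}:{secs:02d}"
    return f"{minutes}:{secs:02d}"


def pace_per_mile(clock: str, miles: float) -> float:
    """Average pace in seconds per mile for a finish time over a distance."""
    return to_seconds(clock) / miles


goal_pace = pace_per_mile("1:38:15", HALF_MARATHON_MILES)  # goal time, exact distance
gps_pace = pace_per_mile("1:38:59", 13.24)                 # watch time, watch distance
equivalent_half = gps_pace * HALF_MARATHON_MILES           # GPS pace held for 13.1094 mi

print("Goal pace per mile:", to_clock(goal_pace))
print("GPS pace per mile: ", to_clock(gps_pace))
print("Equivalent half marathon time:", to_clock(equivalent_half))
```

By this estimate, my GPS pace works out to roughly a second per mile quicker than goal pace. The official clock time is still the one that counts.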

I felt lightheaded and a little nauseated after I finished, as I had given it everything I had. After I stretched out, got myself some pizza, a little water, and even a free beer and a massage, I felt much better!

I would definitely run this race again. I felt as though the water stops were a little infrequent, but then again, I probably could have done a better job with hydration the night before and the day of.

Here are the official results of my finish:

Here are the nitty-gritty details, from Garmin Connect:

https://connect.garmin.com/modern/activity/1417222576

Thanks for reading!